So, the two most common decoders for language generation used to be greedy decoding (GD) and beam search (BS). [1/9]

Beam search: to mitigate this, maintain a beam of several candidate sequences, constructed word by word, and choose the best one at the end [2/9]
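A minimal sketch of that idea, in plain Python. The `toy_model` lookup table and its probabilities are made up for illustration, and scoring by summed log-probability without length normalization is a simplifying assumption. Setting `beam_width=1` reduces it to greedy decoding.

```python
import math

def beam_search(next_log_probs, beam_width=3, max_len=5, eos="<eos>"):
    """Keep the `beam_width` highest-scoring partial sequences at each step."""
    beams = [((), 0.0)]  # (tokens so far, cumulative log-probability)
    for _ in range(max_len):
        candidates = []
        for tokens, score in beams:
            if tokens and tokens[-1] == eos:
                # Finished sequences are carried over unchanged.
                candidates.append((tokens, score))
                continue
            for tok, lp in next_log_probs(tokens).items():
                candidates.append((tokens + (tok,), score + lp))
        # Prune: keep only the best `beam_width` candidates.
        beams = sorted(candidates, key=lambda c: c[1], reverse=True)[:beam_width]
        if all(tokens[-1] == eos for tokens, _ in beams):
            break
    return beams[0]  # best (tokens, score) pair at the end

def toy_model(prefix):
    """Hypothetical next-token model: a hand-written lookup table."""
    table = {
        (): {"the": 0.6, "a": 0.4},
        ("the",): {"dog": 0.4, "cat": 0.3, "<eos>": 0.3},
        ("a",): {"bird": 0.9, "<eos>": 0.1},
    }
    probs = table.get(prefix, {"<eos>": 1.0})
    return {tok: math.log(p) for tok, p in probs.items()}

greedy_tokens, _ = beam_search(toy_model, beam_width=1)
beam_tokens, _ = beam_search(toy_model, beam_width=2)
print(greedy_tokens, beam_tokens)
```

In this toy table, greedy commits to "the" because it is the likeliest first word, while a beam of width 2 also keeps "a" alive and ends up with the higher-probability sequence "a bird".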

But interesting developments happened in 2018/2019 [3/9]

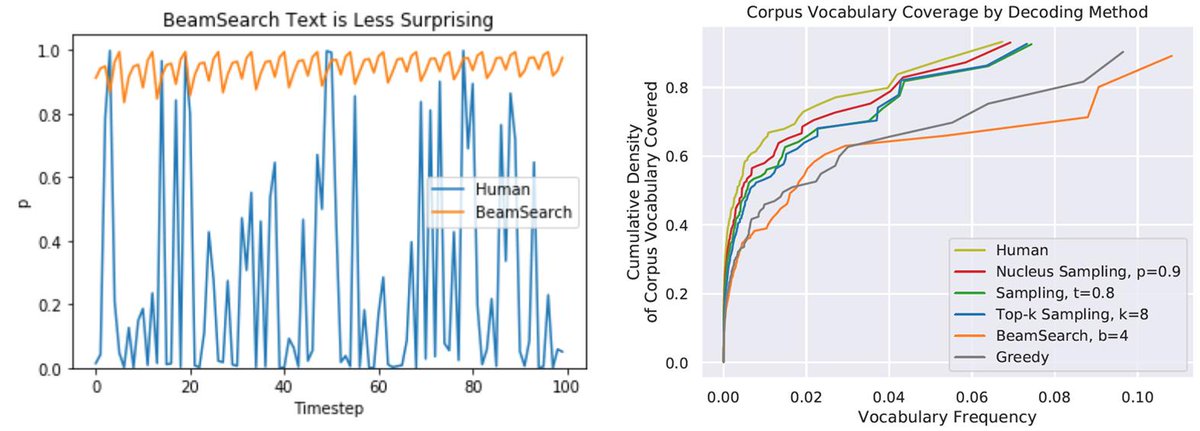

Clearly, BS and greedy decoding fail to reproduce the distributional aspects of human text [7/9]

General principle: at each step, sample from the next-token distribution after filtering it to keep only the k most likely tokens (top-k sampling) or the most likely tokens whose cumulative probability exceeds a threshold (nucleus, or top-p, sampling) [8/9]
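A rough sketch of that filtering step, in plain Python rather than a tensor library. The function names, the dictionary representation of the distribution, and the example probabilities are all assumptions made for illustration; this is not the code from the gist.

```python
import random

def top_k_top_p_filter(probs, top_k=0, top_p=1.0):
    """Return a renormalized copy of `probs` restricted to the kept tokens.

    probs: dict mapping token -> probability (assumed to sum to 1).
    top_k: keep only the k most likely tokens (0 disables this filter).
    top_p: keep the smallest set of most likely tokens whose cumulative
           probability reaches top_p (1.0 disables this filter).
    """
    ranked = sorted(probs.items(), key=lambda kv: kv[1], reverse=True)
    if top_k > 0:
        ranked = ranked[:top_k]
    if top_p < 1.0:
        kept, cumulative = [], 0.0
        for tok, p in ranked:
            kept.append((tok, p))
            cumulative += p
            if cumulative >= top_p:
                break  # the token that crosses the threshold is kept
        ranked = kept
    total = sum(p for _, p in ranked)
    return {tok: p / total for tok, p in ranked}

def sample(probs, rng=random):
    """Draw one token from a token -> probability dict."""
    tokens, weights = zip(*probs.items())
    return rng.choices(tokens, weights=weights, k=1)[0]

# Hypothetical next-token distribution for a single decoding step.
next_token_probs = {"a": 0.5, "b": 0.3, "c": 0.15, "d": 0.05}
filtered = top_k_top_p_filter(next_token_probs, top_p=0.9)
print(sorted(filtered), sample(filtered, random.Random(0)))
```

With `top_p=0.9`, the low-probability tail token "d" is dropped before sampling, so the draw stays random but cannot land on the least likely options.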

gist.github.com/thomwolf/1a5a2…

[9/9]