Rust and C++ use explicit memory management. There’s no GC. We want to write components in these languages for speed, and hand out nice JS objects to JS users, without forcing them to free memory explicitly.
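As a rough sketch of what that could look like from the JS side, here is a wrapper class that uses `FinalizationRegistry` to release a native allocation once the wrapper has been collected. The names `nativeAlloc`, `nativeFree`, `liveHandles` and `NativeBuffer` are hypothetical stand-ins for a real Rust or C++ binding, not any actual API, and the cleanup callback runs at an unspecified time (possibly never), so the explicit `free()` is kept as a deterministic escape hatch.

```javascript
// Hypothetical stand-in for an allocator implemented in Rust or C++.
// A real binding would hand back a pointer or an opaque handle instead.
const liveHandles = new Set();
let nextHandle = 1;

function nativeAlloc() {
  const handle = nextHandle++;
  liveHandles.add(handle);
  return handle;
}

function nativeFree(handle) {
  liveHandles.delete(handle);
}

// The callback receives the held value (the handle), never the wrapper itself.
// The engine may call it some time after the wrapper is collected, or not at all.
const registry = new FinalizationRegistry((handle) => nativeFree(handle));

class NativeBuffer {
  #handle;

  constructor() {
    this.#handle = nativeAlloc();
    // The held value must not reference `this`, or the wrapper could never
    // be collected. `this` is only passed as the unregister token.
    registry.register(this, this.#handle, this);
  }

  // Optional explicit release for callers who want deterministic cleanup.
  free() {
    if (this.#handle !== 0) {
      registry.unregister(this);
      nativeFree(this.#handle);
      this.#handle = 0;
    }
  }
}
```

A JS user can simply drop a `NativeBuffer` and let the collector deal with it, or call `free()` when they care about exactly when the memory goes away.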

What the heck, you read this far

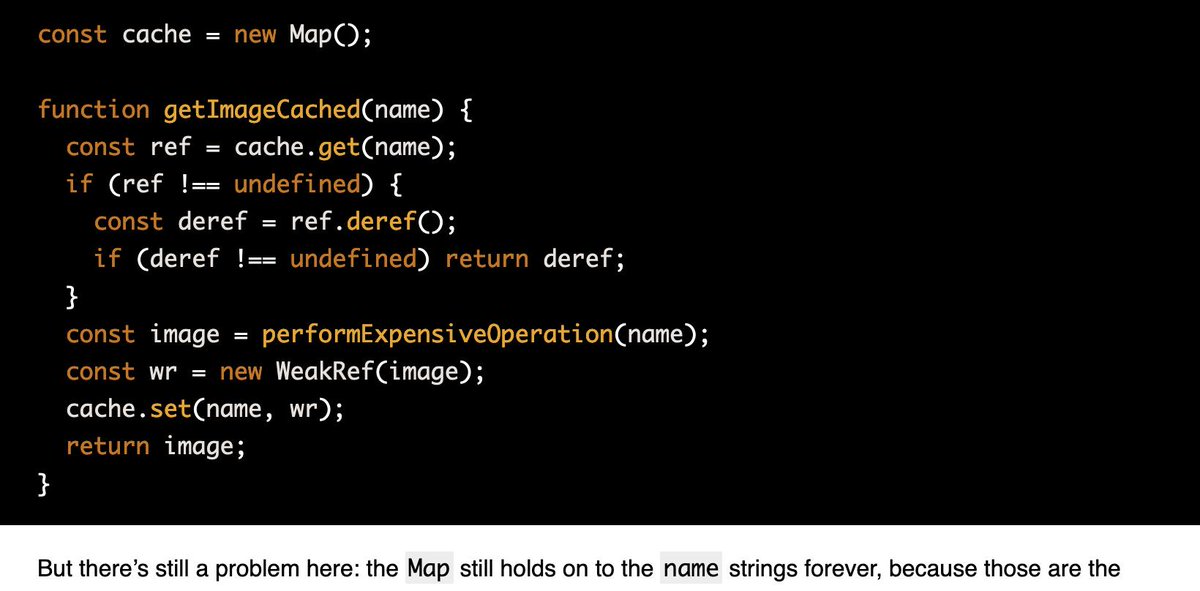

1. Please remember that nondeterminism makes bugs harder to reproduce and fix, so don’t use WeakRefs (not that you’ll necessarily know whether your dependencies use them, but we can all try)