It was a big week, and in this thread I'll summarize what I learned at #NeurIPS2019 :-)

There were 13k attendees this year!

All the big players were here; only @nvidia was missing.

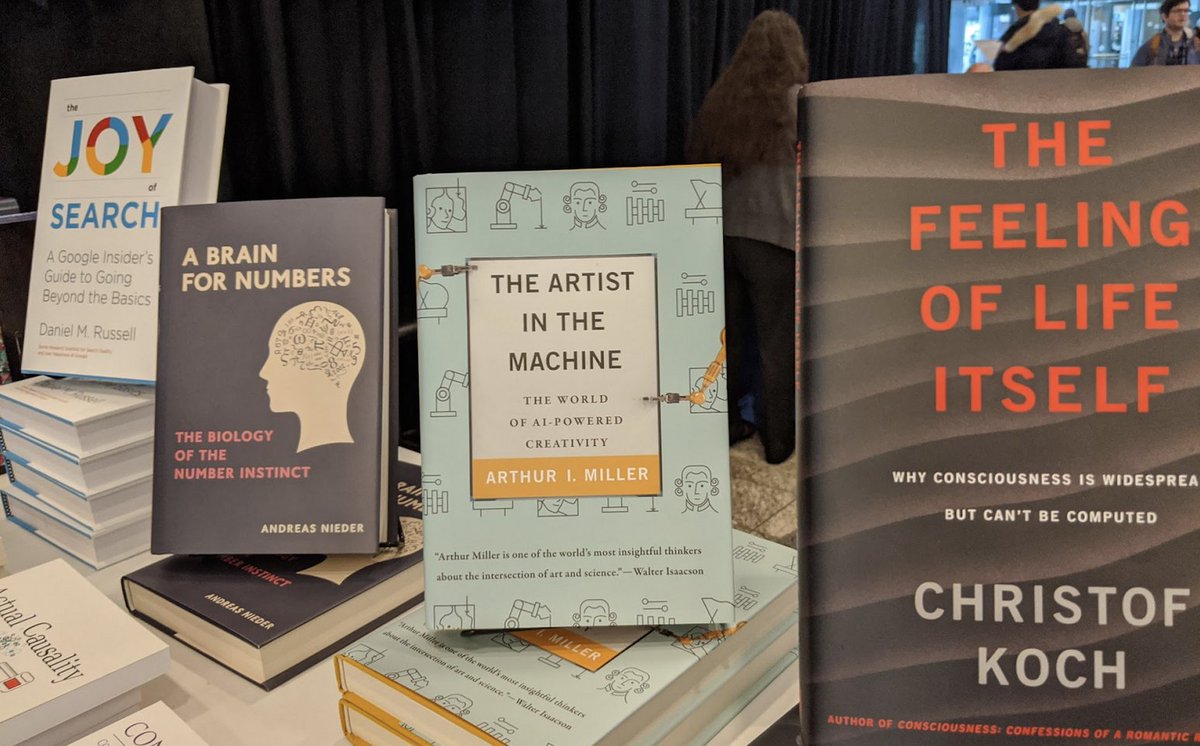

It was fun to see @ArthurIMiller at the @mitpress booth.

mitpress.mit.edu/books/artist-m…

I recommend watching it if you are interested in the theoretical basis of RL:

slideslive.com/38921493/reinf…

Because of the quality of her research (about how humans form beliefs), and also because of her #metoo statement.

Everyone in the room was impressed & inspired.

#StrongWomen #Courage

Like last year, it helps fight stereotypes just by showing that you can fill a *HUGE* space with excellent research done by women and black people.

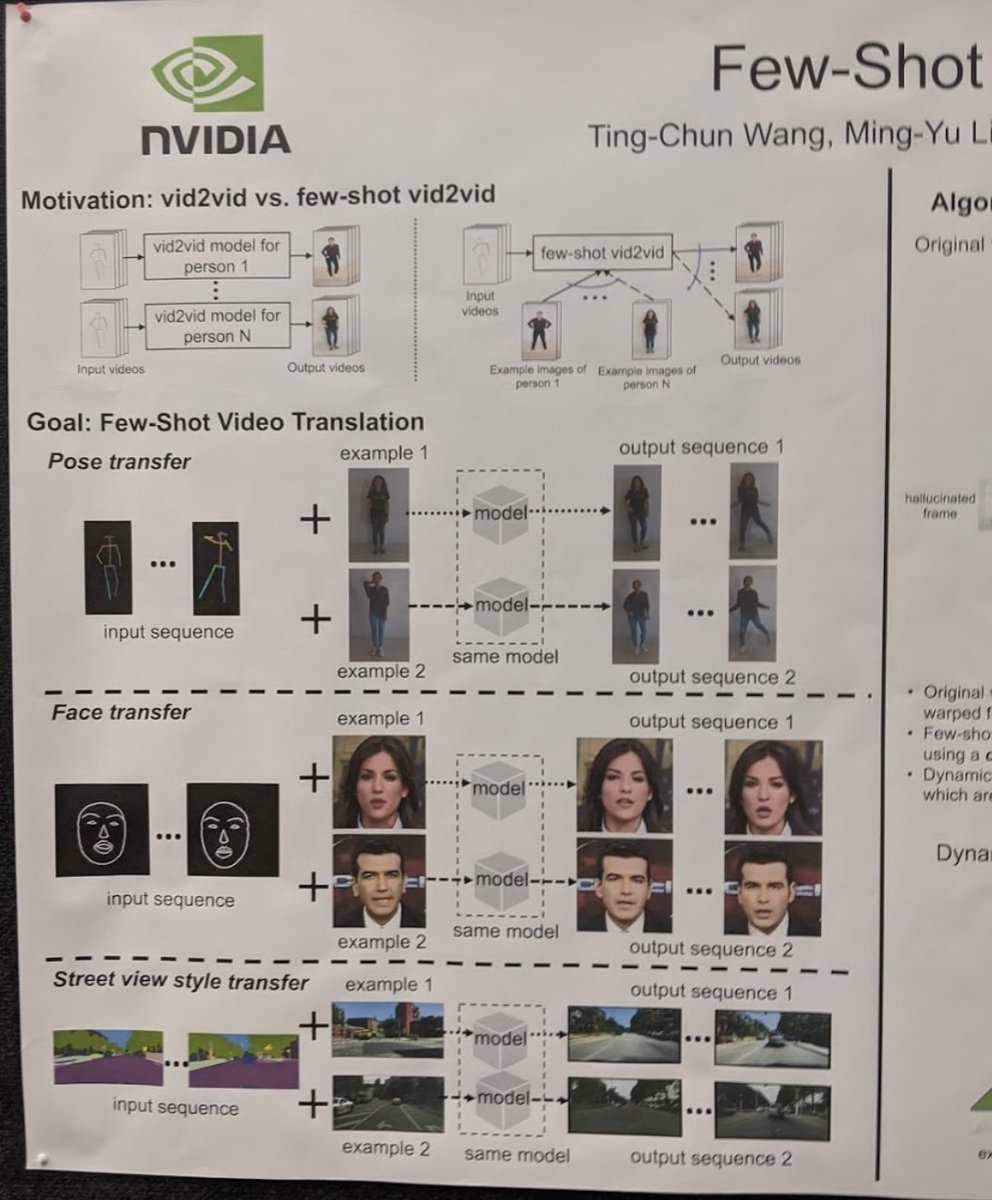

@NvidiaAI presented their few-shot video translation, a follow-up to last year's vid2vid.

You can watch it here: slideslive.com/38921748/socia…

“Every exponential is always the first part of a sigmoid”

👍👍👍👍👍👍👍👍👍👍👍👍👍👍👍👍👍👍

Take that in your face, Singularity. 🤣

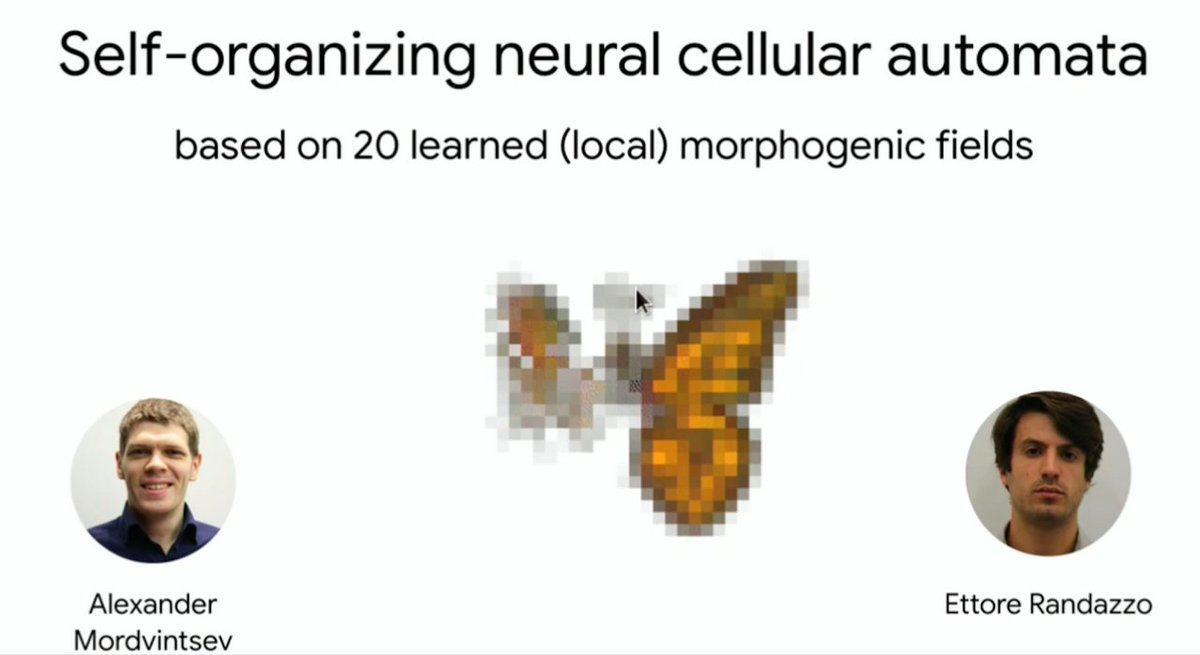

Think of the Game of Life, but where the rules are neural-network based and learned.

Then imagine that you draw a digit, and each cell colors itself to predict which digit it belongs to. The cells converge, so at the end the whole digit has the right color.

The Artificial Ear from @idivinci won the “Best demo award”.

It is a cool #dataviz of a neural network that analyses music and is also able to synthesize audio.

More info here:

vincentherrmann.github.io/demos/immersio…

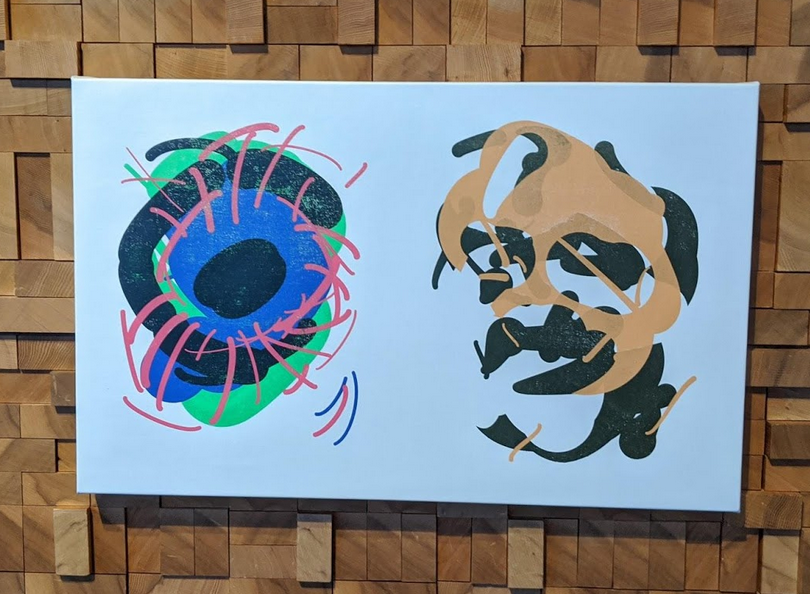

They created a virtual artist that draws your portrait after interviewing you.

More info here: ivizlab.org/research/ai_em…

This is the painting it did of me:

@NvidiaAI unveiled #StyleGAN2!

The best image generator so far.

github.com/NVlabs/stylega…

It didn’t take long for @quasimondo to start exploring it:

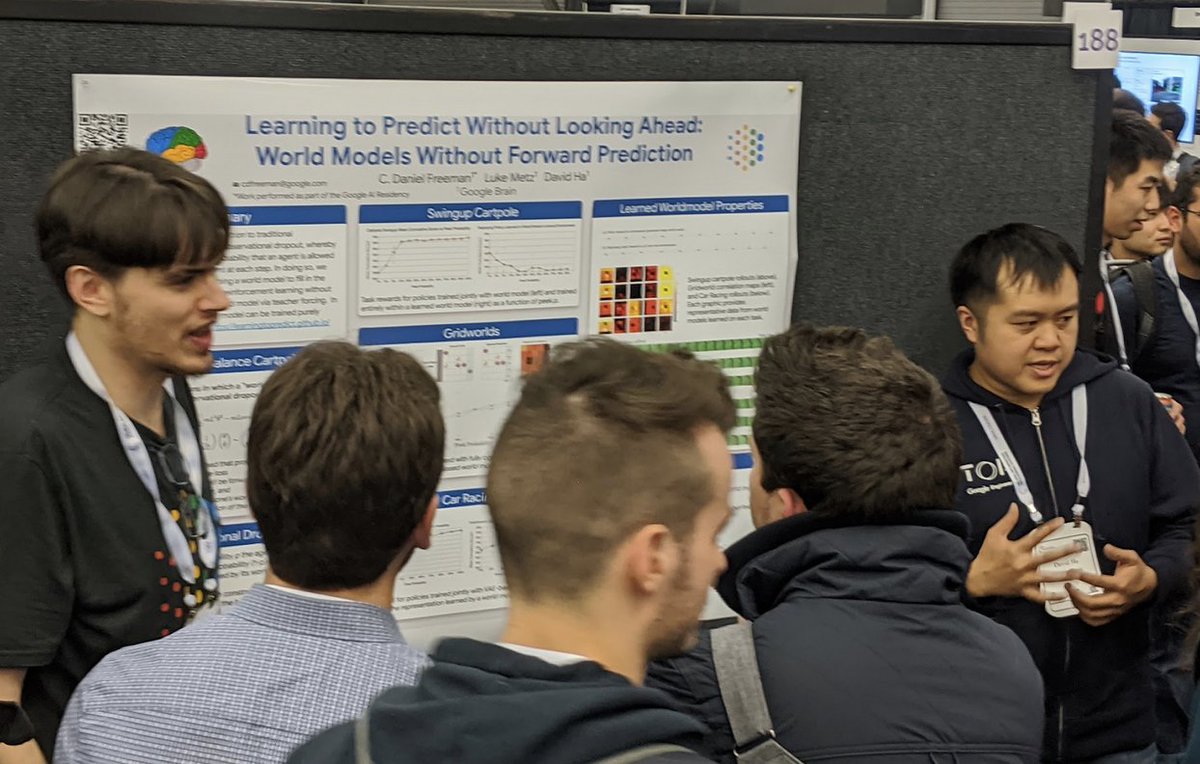

He shows that a predictive model can emerge if an agent can’t observe every time step and so has to guess the state from time to time. Optimization is based only on the reward, with no direct comparison to the actual state.
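Here is a minimal sketch of that setup, assuming a toy 1-D environment with scalar states and actions. At each step the agent sees the real state only with probability `p_observe`; otherwise it must use its own world model's guess. The only thing returned for optimization is the total reward, so the world model is never compared to the true state directly. All function and parameter names are hypothetical, and the optimizer that would tune `policy` and `world_model` on that reward is omitted.

```python
import random
from typing import Callable, List, Tuple


def rollout_with_observational_dropout(
    env_step: Callable[[float, float], float],
    world_model: Callable[[float, float], float],
    policy: Callable[[float], float],
    reward_fn: Callable[[float], float],
    initial_state: float,
    steps: int,
    p_observe: float,
    rng: random.Random,
) -> Tuple[float, List[bool]]:
    """Run one episode where the real state is visible only with probability p_observe.

    Returns the total reward (the only training signal) and a mask of the
    steps on which the agent actually saw the real state.
    """
    true_state = initial_state
    belief = initial_state  # the agent starts from a real observation
    total_reward = 0.0
    observed: List[bool] = []
    for _ in range(steps):
        action = policy(belief)
        true_state = env_step(true_state, action)
        total_reward += reward_fn(true_state)
        if rng.random() < p_observe:
            belief = true_state  # the agent gets to look at the world
            observed.append(True)
        else:
            belief = world_model(belief, action)  # the agent must guess
            observed.append(False)
    return total_reward, observed


# Toy usage: the policy pushes the state towards 0, and reward is -|state|.
total, mask = rollout_with_observational_dropout(
    env_step=lambda s, a: s + a,
    world_model=lambda s, a: s + a,  # a perfect model, just for illustration
    policy=lambda s: -0.5 * s,
    reward_fn=lambda s: -abs(s),
    initial_state=1.0,
    steps=3,
    p_observe=0.3,
    rng=random.Random(0),
)
```

Because the world model is only used when the agent is "blind", a bad model shows up as lost reward, which is why a useful predictive model can emerge without ever being trained to predict.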

Organized by:

@elluba, @sedielem, @RebeccaFiebrink, @ada_rob, @jesseengel, @dribnet, @mcleavey, @pkmital, @naotokui_en

Full program here:

neurips2019creativity.github.io

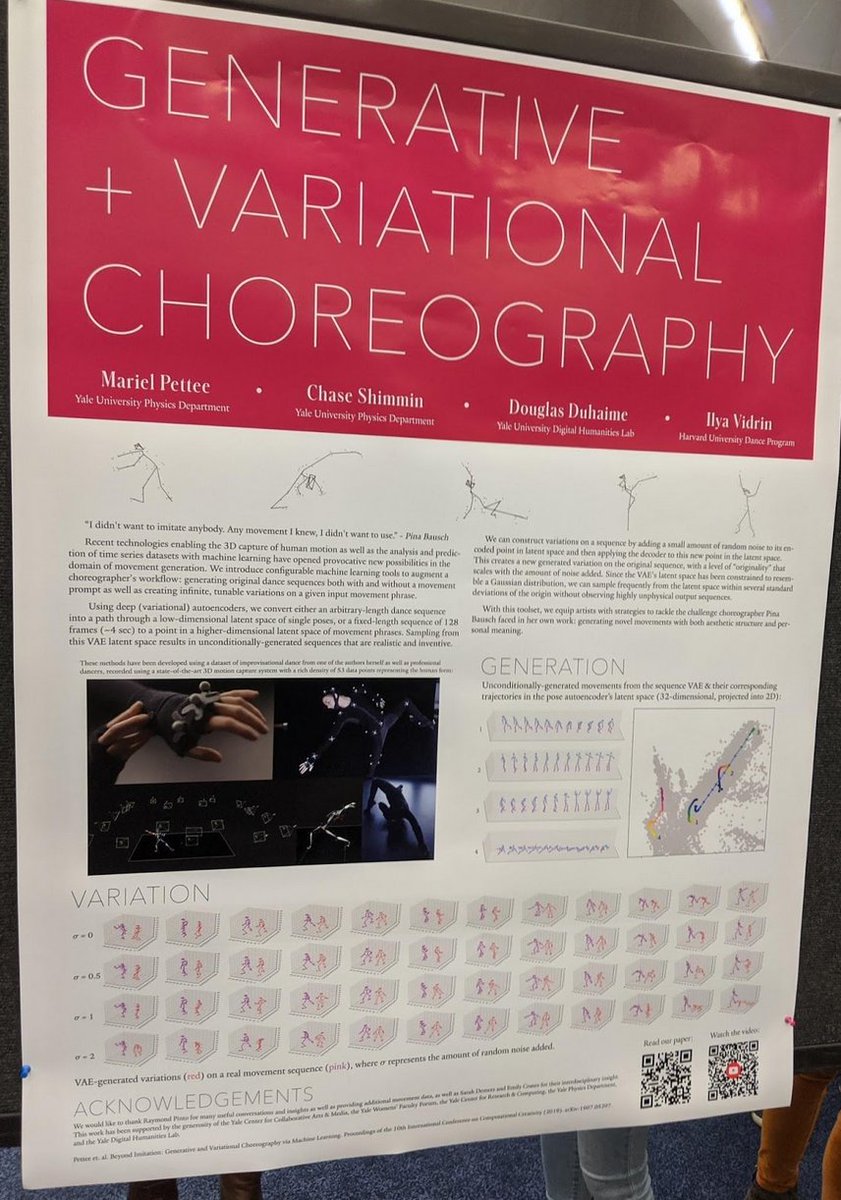

Everything was great:

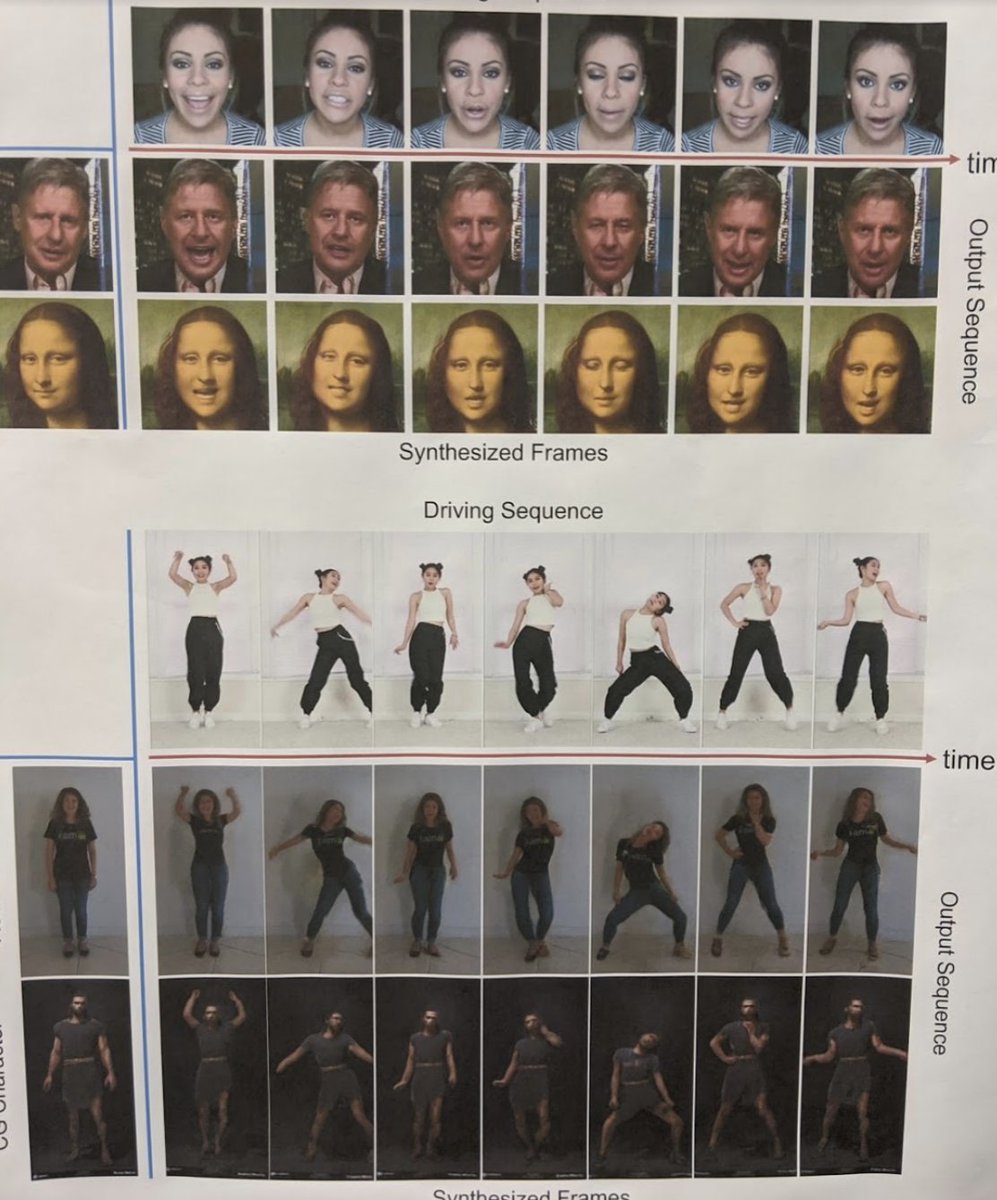

1) The main problem is not politics, it’s non-consensual videos targeting women.

2) Even if a video is real, someone can claim it is fake: there is no proof anymore.

3) Analysing the camera sensor's footprint versus the GAN's footprint can help detect fakes.

You can earn $1M if you find a way to detect fakes.

More here: ai.facebook.com/blog/deepfake-…

More info here:

bbc.com/future/gallery…

You can play with it here: midi-me.glitch.me

neurips2019creativity.github.io

One song, SCATTERHEAD, is on YouTube:

They decided they could not add notes, add harmonies, jam, or improvise.

But they could choose the instruments, transpose melodies or cut them.

slideslive.com/38922087/neuri…

The first time I was 😂 at an ML conf.

Thanks @improbotics

#feelthelearn

And, like last year, @dribnet was selling amazing artwork.

You can buy some of it here too: dribnet.bigcartel.com

slideslive.com/38922086/neuri…

slideslive.com/38922087/neuri…

slideslive.com/38922088/neuri…

slideslive.com/38922089/neuri…

1) End-to-end learning has lost its magical aura.

2) Adding priors to a network, by selecting the right architecture, is now cool.

3) The concept of regret (how much better an agent could have done) is trending.

NLP is having the same revolution that computer vision had in 2010.
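For the regret point, a minimal sketch: in a multi-armed bandit, cumulative regret sums, over every step, the gap between the best arm's expected reward and the expected reward of the arm the agent actually pulled. This assumes the arm means are known, which is only true in a simulation; the function name and numbers are hypothetical.

```python
from typing import Sequence


def cumulative_regret(arm_means: Sequence[float], chosen_arms: Sequence[int]) -> float:
    """Expected reward lost compared with always pulling the best arm.

    regret = sum over steps of (best mean - mean of the arm actually chosen)
    """
    best = max(arm_means)
    return sum(best - arm_means[a] for a in chosen_arms)


# Hypothetical 3-armed bandit with known expected rewards.
means = [0.25, 0.5, 1.0]
print(cumulative_regret(means, [0, 1, 2, 2]))  # → 1.25
```

An agent that learns well has regret that grows slower and slower over time, because it ends up mostly pulling the best arm (each such pull adds 0).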

medium.com/@thresholdvc/n…

The industry will need at least a decade to take advantage of all the research done so far.

I can’t name them all here, but that’s really the reason why you want to attend in person.

That's all folks!

And if you want to read my thread from last year, it's here: