Expect him to call for banning strong #encryption, as he did last July, but this time by amending 230 and framing it as "protecting the children"

My take: techdirt.com/articles/20200…

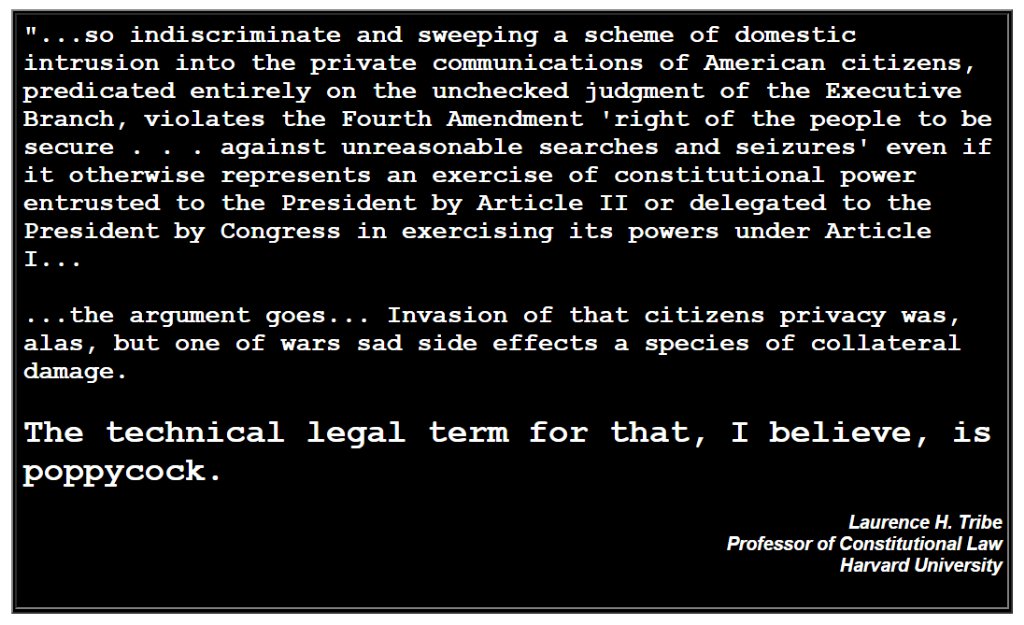

And... "Damn it, Jim, I'm a lawyer, not a cryptographer!"

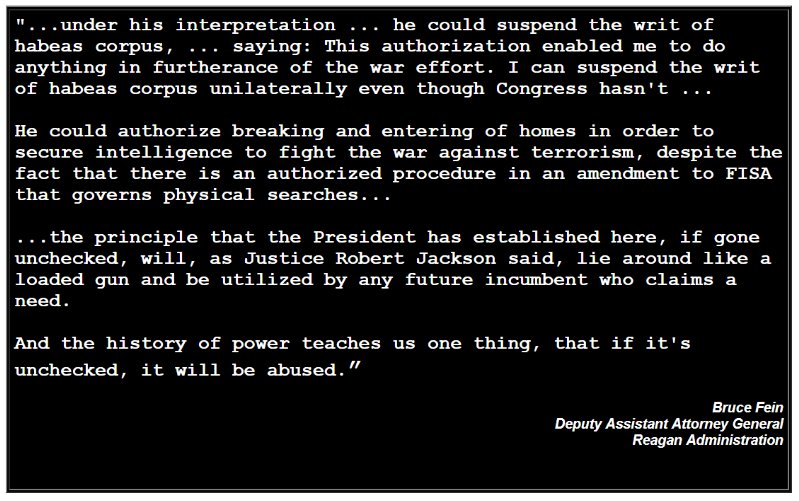

So let me walk you through how Barr's going to tie this into #Section230...

But Barr would get exactly what he proposed in July from the #EARNIT Act, which @LindseyGrahamSC and @SenBlumenthal are expected to release shortly

i.e., abandon strong encryption

That's essentially what @cagoldberglaw & @annietmcadams have argued. It's not an accident that they're on panel #1

We'll see what happens in the morning!

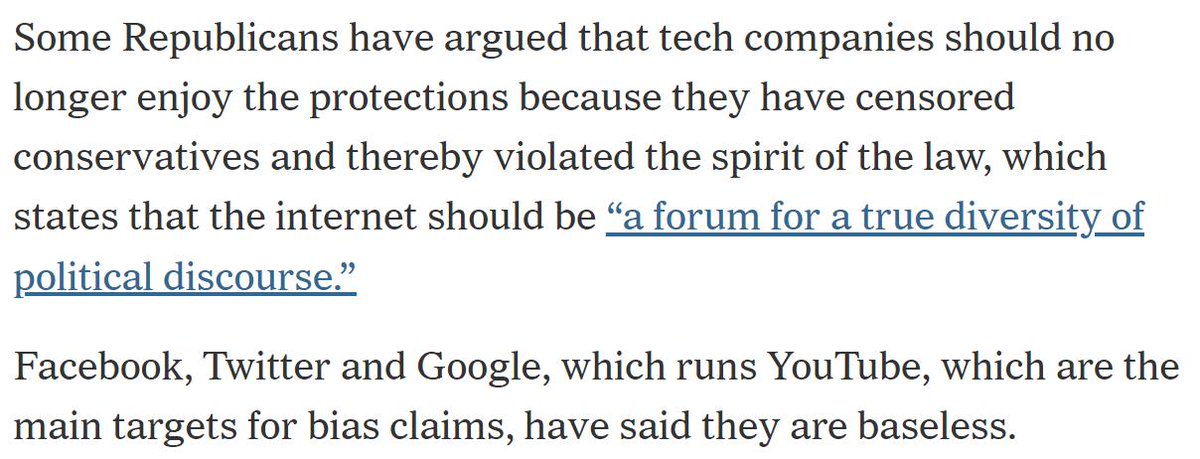

washingtonpost.com/politics/trump…

morningconsult.com/opinions/bill-…

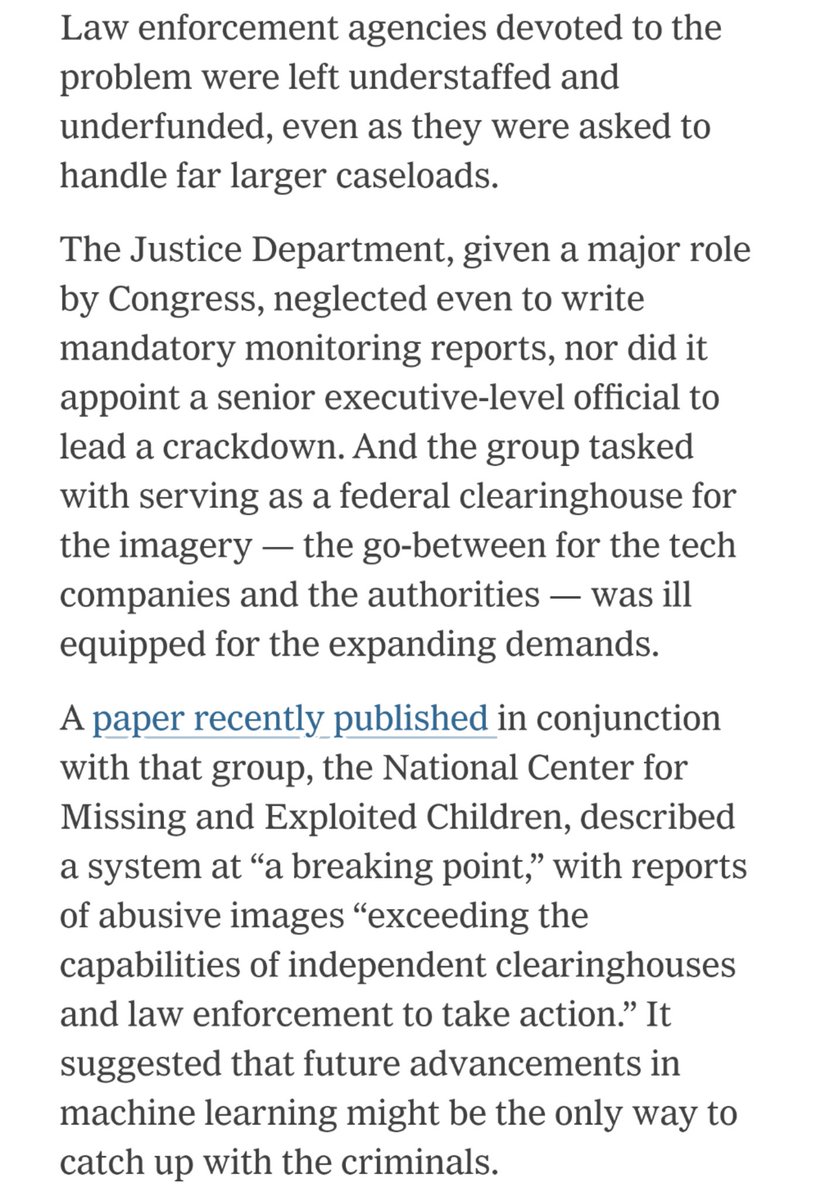

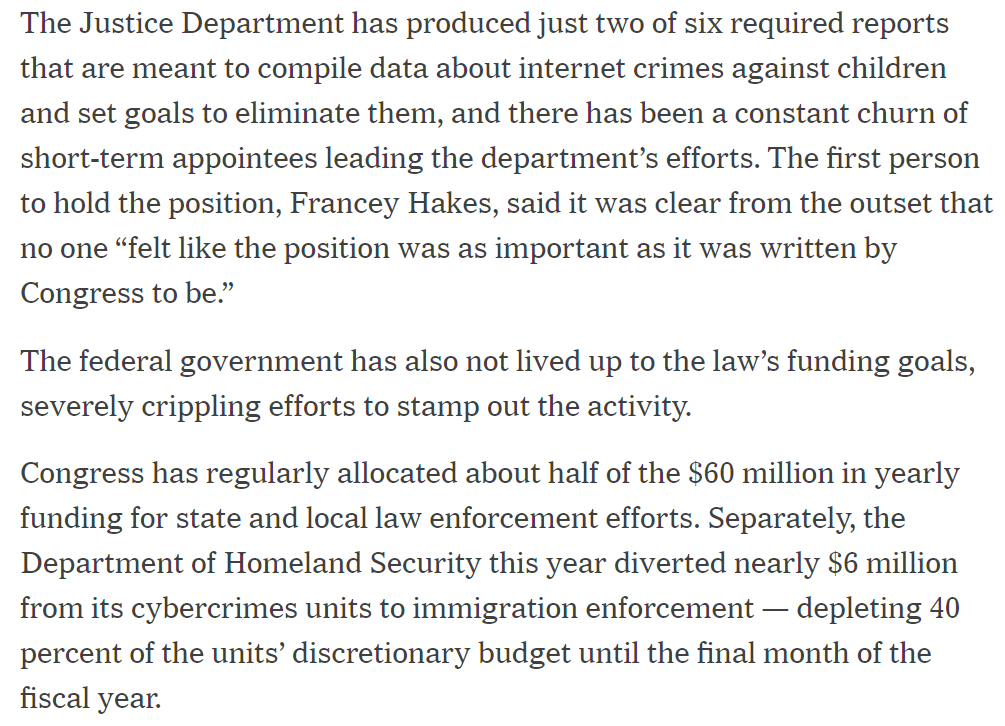

Meanwhile, CSAM goes unpunished because of underfunding, NOT encryption

nytimes.com/2020/02/19/pod…

WHY AREN'T WE DEALING WITH *THAT* PROBLEM?

Also problematic was that the law makes research on CSAM nearly impossible...

Amending #Section230 may soothe populist rage over "Big Tech" but it's not the fix children need

1) Actually spending the funds Congress allocated to law enforcement in 2008

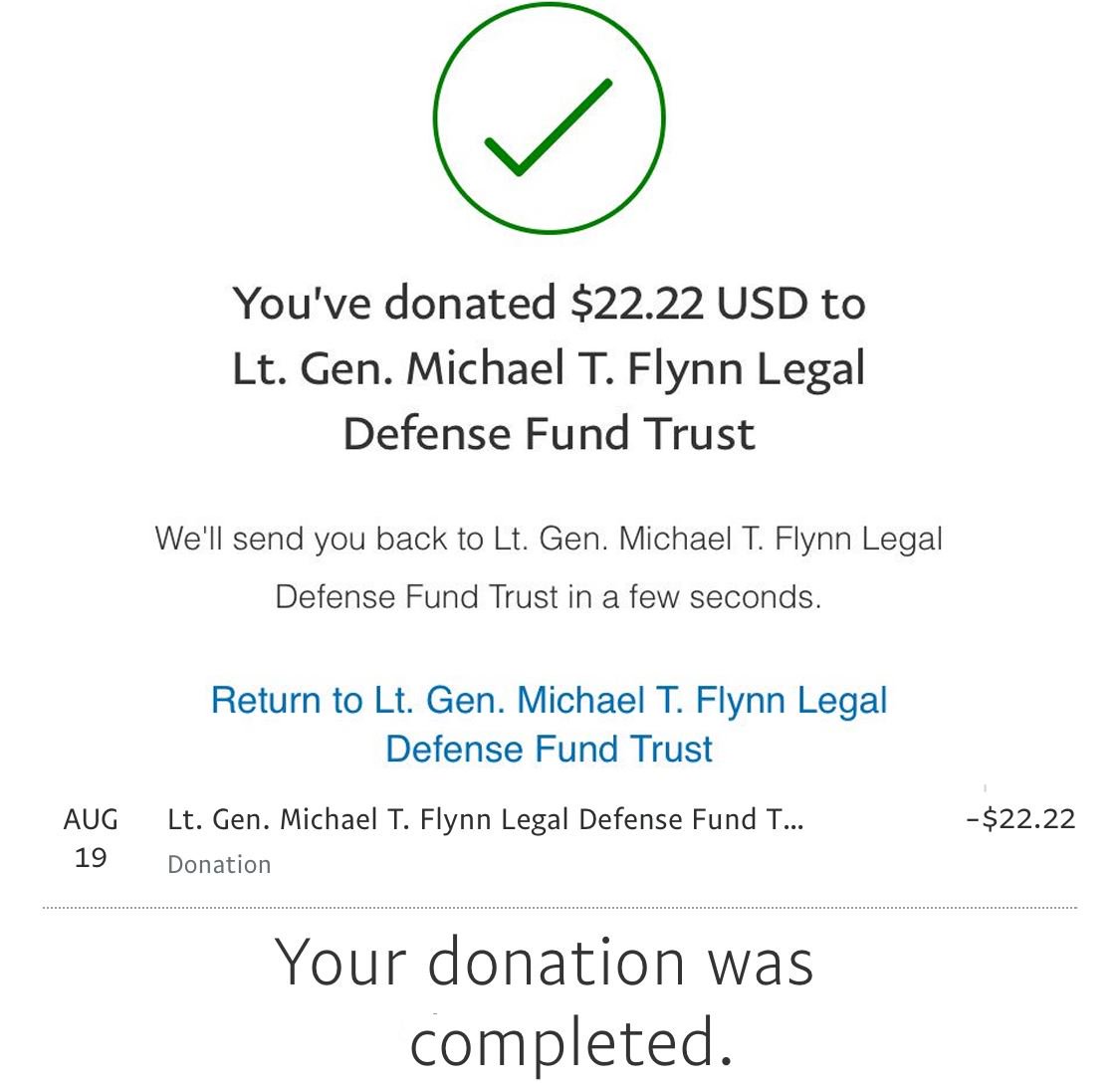

2) Not diverting CSAM funding to fight Trump's Captain-Ahab-level war on immigration

nytimes.com/interactive/20…

- Barr

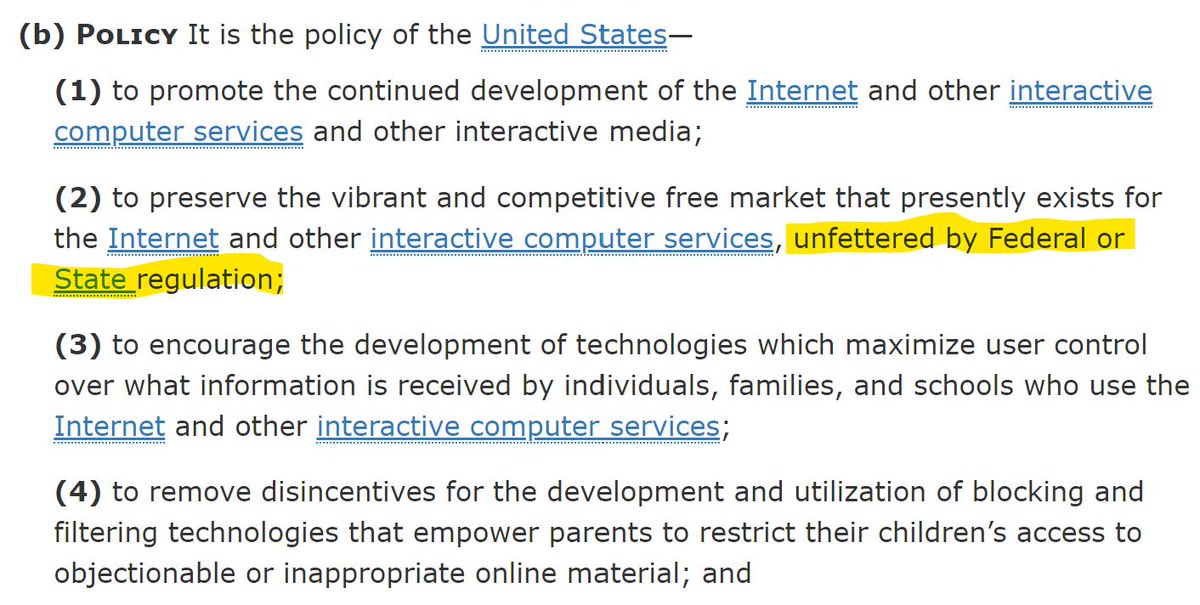

But #Section230 protects ALL online services, not just the big ones. Making websites liable for user speech would hurt small sites and startups while entrenching the market power of Big Tech

Barr just started. He immediately turns to Section 230, tying the issue to competition policy. "Not all concerns about online platforms fall within antitrust..."

Basically limitless!

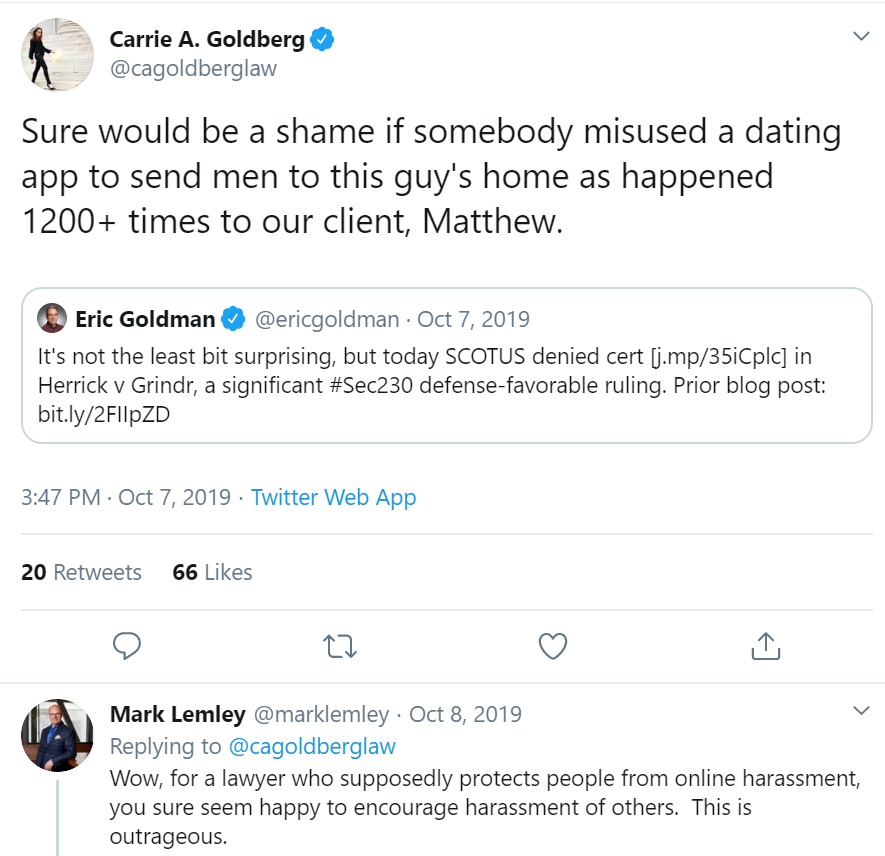

- @cagoldberglaw

FALSE! Section 230 doesn't protect websites from:

- Federal criminal prosecution

- IP law

- Privacy law

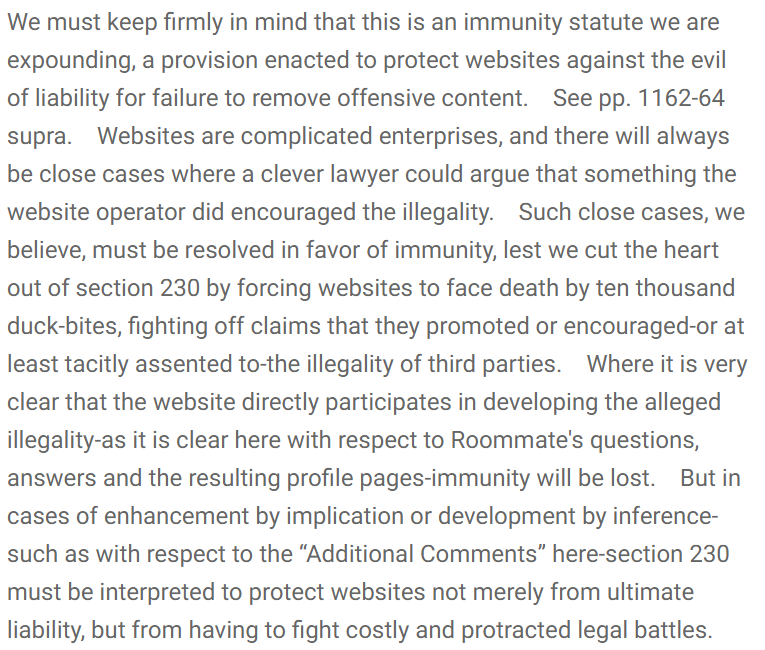

- OR content that the platform is responsible for creating, even in part, as in the Roommates case

Crocodile tears from a plaintiff's lawyer

Here's how she responded when @EricGoldman, the leading 230 law professor, merely blogged about the Supreme Court's decision not to take her case

She could be sued. And under her view, so could Twitter

So, should Twitter delete her tweet?

MAYBE Twitter survives (or more likely just Facebook), but EVERY WEBSITE WITH USER COMMENTS would likely no longer host user content

- if Congress hadn't intended #Section230 protections to be broader than defamation, they wouldn't have included exceptions for criminal law, IP & privacy

- The lawsuits @cagoldberglaw brought would have failed under normal law anyway

...

- limiting #Section230 protections will expose websites to "death by 10,000 duckbites" (Judge Kozinski's term in the Roommates case)

- this will simply cause websites to do (even) less to moderate harmful user content

- @PJCarome

The REAL issue is that making websites liable for imperfect moderation of user content creates a perverse incentive not to try!

#Section230

Again, her proposed revision to #Section230 could force Twitter to take down HER tweet urging her followers to do the EXACT SAME THING to @ericgoldman

#Hypocrisy

Wanna lock in the incumbents? Sure, kill Section 230. Upstarts couldn't get off the ground.

The First Amendment protects a LOT of awful speech. But 230 allows responsible platforms to TRY to moderate

- @PJCarome

But:

a) #Section230 has NEVER hindered enforcement of federal law and

b) the real problem is underfunding enforcement, as the NYTimes notes today

(a) revenge porn is an entirely separate problem from CSAM, which NO ONE defends as "free speech"

(b) yes, #Section230 means we need a consistent national law on NCP (nonconsensual pornography)

So let's talk about that, NOT rolling back #Section230

What utter bullshit. SESTA-FOSTA hasn't actually been used. CONVICTING Backpage executives had a HUGE deterrent effect

#Section230 makes all this moderation/innovation possible!

Yup. The problem isn't #Section230. It's that Congress has spent only HALF what it committed to spend on policing CSAM in 2008 and Trump has diverted some of that money to immigration

We're quite open to updating criminal law

But we don't need to amend #Section230, as SESTA did, to do that

It's a total red herring

- @Klonick

#Section230

- @Klonick

Exactly right. Backpage DESERVED to be prosecuted under criminal law and was BEFORE SESTA amended #Section230

Again, 230 has NEVER hindered enforcement of federal criminal law

- @MA_Franks

Is she even listening to her fellow panelists?!? They have STRONG economic incentives to take down harmful content AND 230 doesn't protect sites from criminal liability

Nothing she's said addresses THAT problem

- @MA_Franks

Um, #MeToo? #BlackLivesMatter?

Hello? Have you not noticed ANY of the great things the Internet has enabled?!?

#Section230 isn't the problem

- @Klonick

#Section230

- @MSchruers


Yes, but that's largely because companies are becoming more cautious in what they report (eg normal baby pics), so it's impossible to tell whether the problem is really growing or we're just measuring it better

which merely serves to illustrate the imperfection of technology, precisely the reason we have #Section230 in the first place

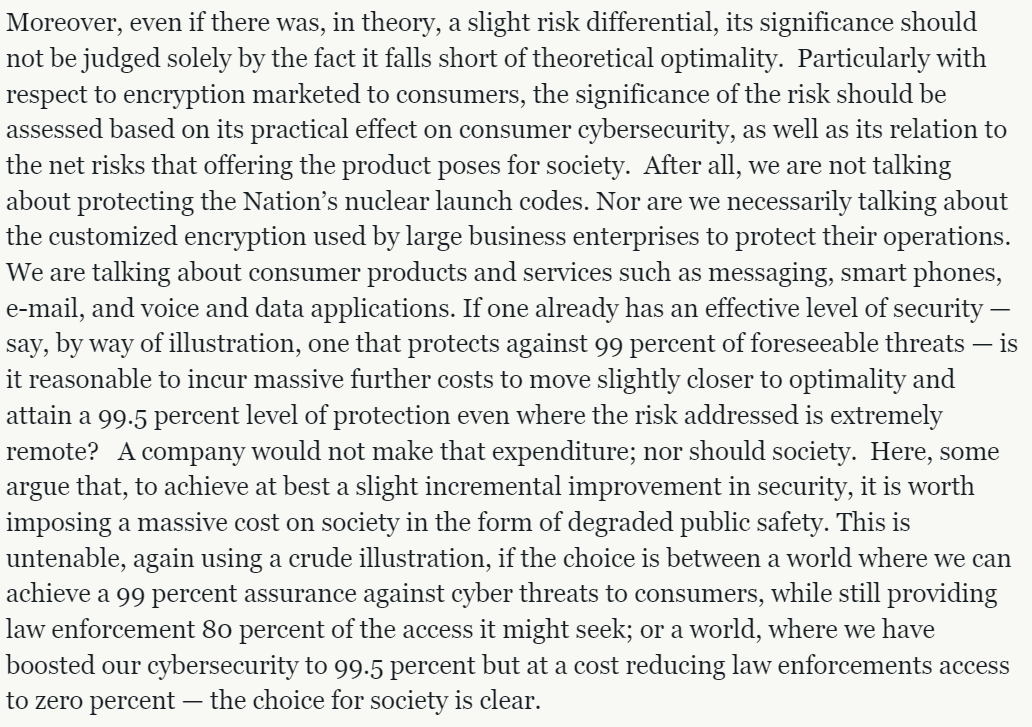

Content moderation at scale is impossible, and making companies liable will cause them to do LESS, not more

OK, so... how the heck are websites supposed to do content moderation perfectly?

#Section230 removes the disincentive to try to moderate content

The answer is clearly the latter

THAT is why we have #Section230

@MA_Franks just isn't doing that

Read the principles on 230 we drafted last summer

digitalcommons.law.scu.edu/cgi/viewconten…

- @Klonick quoting @jackbalkin

And (re FB livestream being used for shootings): FB didn't kill anyone. They merely made it transparent.

#Section230

- @MSchruers

Right. #Section230 isn't the problem

That's insane. States criminalize all kinds of things, from defamation to panhandling

We need a consistent body of federal criminal laws to govern the Internet. Let the states enforce THAT

- @MSchruers

So don't let #Section230 be used to ban encryption!

Yet law enforcement lacks the resources to go after PUBLIC CSAM on Bing, as NYTimes reports today nytimes.com/2020/02/19/pod…

#Section230

Also, it's crazy to claim that 230 didn't foresee encryption, an issue Congress had already hotly debated for years by 1996

- @MSchruers

But federalism demands that we not let one state/locality write laws for US

- @MSchruers

1) fund federal enforcement

2) rather than allowing them to enforce a crazy-quilt of varying criminal law, deputize them to enforce a consistent body of FEDERAL criminal law--a very surgical amendment to #Section230

Federal criminal law ALREADY authorizes such deputization, as we pointed out in the #SESTA debate. We were completely ignored

law.cornell.edu/uscode/text/28…

#Section230 won't stop them!

It's pure corporate-on-corporate warfare. It has nothing to do with victims or abuse

- @neil_chilson

- @NewsCEO

Bullshit. Take away Section 230 and every newspaper and broadcaster would take down user commenting from their websites because they can't possibly police comments at scale

- @NewsCEO

But without #Section230, it would be IMPOSSIBLE for today's user-centered Internet to exist. This is key...

THAT is why we have #Section230

It's NOT a special subsidy. It's essential to the web

THAT just wouldn't have happened without #Section230

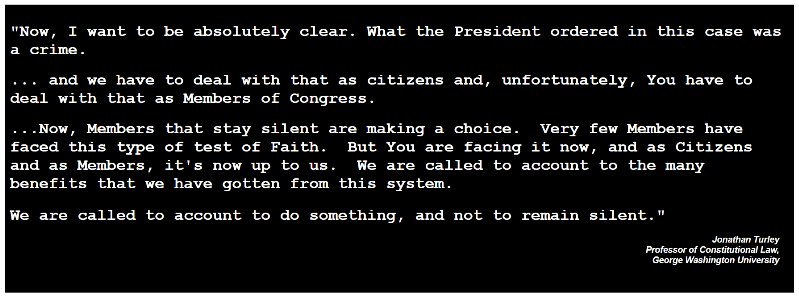

I suppose my calling #BillBarr a mob lawyer and calling for his impeachment might explain my not being on a panel 🤔

digitalcommons.law.scu.edu/cgi/viewconten…

We can't eliminate ALL harms, nor do we expect to offline...

- @EricGoldman

- @neil_chilson


- @JuliePSamuels

Moreover, a size cap presents a huge problem for startups trying to grow, and would create a moat around Big Tech

- @NewsCEO

Yes, the "press" is mentioned in the First Amendment, but the Supreme Court has regularly extended full First Amendment protection to the Internet, not just to media that the old industry's trade association CEO thinks "matter more"

ICYMI: justice.gov/opa/speech/att…

I explained the #Section230-#encryption connection in an op-ed this morning: morningconsult.com/opinions/bill-…

And in a @TechDirt essay last week: techdirt.com/articles/20200…

- @JuliePSamuels


- @EricGoldman