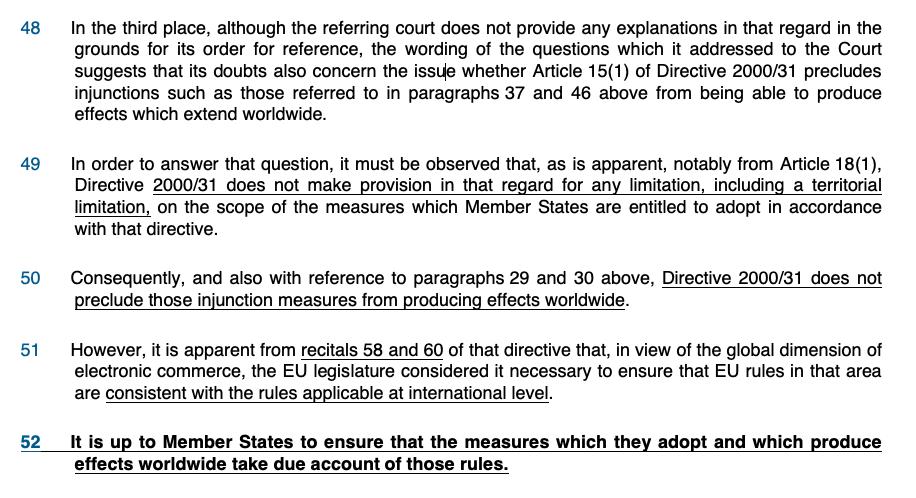

@DanSvantesson talks about that part here: linkedin.com/pulse/bad-news… 7/

Trampel), and “fascist party” (Faschistenpartei). 16/

Over and out. 23/

1. This creates massive business uncertainty for small platforms. Being told "don't worry, you may never face such an order and it will be proportionate" will not reassure investors.